I’ve been using my nifty terminal helper to write the bash commands that my brain refuses to remember. It’s quick and accurate (enough); the best tool yet I’ve built using an LLM.

The only downside is its reliance on the Claude API, which means it’s useless without an internet connection and a credit card.

But can we get another version to run locally? No APIs, no tech companies leaking our data; just our own little AI, on our own computer.

In short: yes. But we need to be clever about it.

🛠 Click here to jump straight to the HOW-TO 🛠

Super Models🔗

A quick refresher on the basics, first.

‘AI’ bots like ChatGPT and Claude are powered by Large Language Models (LLMs). The margins of this blog are too small (and its author insufficiently STEM’d) to contain a full explanation of how these work, but essentially they’re programs for predicting patterns of words.

These predictions are made based on parameters drawn from massive bodies of text. Us internet-connected humans have been merrily churning out text to the Web for decades, and the LLMs ingest this to ‘learn’ what words usually come after each other.

Hoover fast and break copyright.

Hoover fast and break copyright.

Models differ from one another in their training data and their weightings: what stuff the LLM has read and what it attaches importance to. Some models lean more towards coding-related data, others are trained on specialist material to suit their use-case.

In the beginning, bigger was better: the more data you hoovered up, the more parameters your model could contain and the better its responses could be. OpenAI’s GPT-2 contained 1.5 billion parameters when it was released in 2019; by 2024, their GPT-4 model had somewhere north of 1.4 trillion.

The problem with such massive models is that they need similarly colossal hardware to actually run. There’s a reason Nvidia is now the most valuable company on the planet: in the AI gold-rush, they sell the biggest shovels. Shovels that sell for $40,000 and use more power than a house.

Somehow, I don’t think my 9-year old Thinkpad is quite going to cut it with a top-end model.

Small is beautiful🔗

Although the mega-models tend to get the headlines, there have been some fascinating developments down at the other end of the scale, too. Microsoft’s Phi and Google’s Gemma models are - amongst others - tailored to use a much smaller sets of parameters, to deliver usable performance on much skimpier hardware.

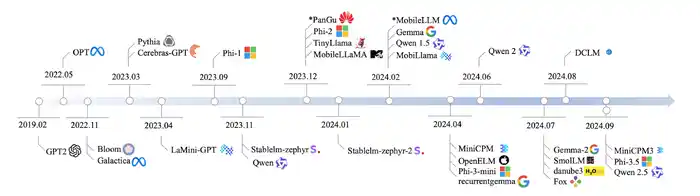

A timeline of Small Language Models

A timeline of Small Language Models

The thinking here is that lots of AI tasks can actually be handled using smaller models, running directly on users’ devices, rather than having to send requests off to energy- (and capital-) hungry data centres full of chuntering H100s.

And they’re getting pretty good.

Yes We Qwen🔗

I’ve tinkered with Phi and Gemma. While both are fairly capable, they’re still a little on the large side. My poor X1 isn’t overflowing with RAM - and lacks any discrete GPU - so every MB counts.

The latest and smallest Qwen-coder model, on the other hand, is positively minute: a mere 500MB! That’s half the size of GPT-2, but massively more coherent and capable.

Just don’t ask about Winnie The Pooh

Just don’t ask about Winnie The Pooh

It won’t write beautiful sonnets or fall in love with journalists, but it will pump out passable commands at a snappy pace, with a minimal RAM footprint.

Let’s give it a whirl.

How To 🛠🔗

1) Install Ollama🔗

Ollama is the go-to software for quickly downloading and running different models. They’ve an extensive catalogue of pre-packaged LLMs that can be pulled with a single command, and a rudimentary chat interface on the command line. I tend to stick with the CLI version, but you can also set up OpenWebUI for a nice front-end.

You can download the latest version of Ollama here.

2) Download the model🔗

There are plenty of small language models to choose from. My current preference is for Alibaba’s Qwen2.5 Coder model, clocking in at a tiddly 0.5b parameters. Your mileage may vary, so do poke around the catalogue, and perhaps try Gemma or Phi.

To download the model, run:

ollama pull qwen2.5-coder:0.5b

3) Write the Modelfile🔗

This Qwen model is already weighted towards coding queries, but we want to further refine it to suit our use-case of generating Bash commands.

To do this we make a Modelfile, a set of instructions to tweak the model towards our needs (my Modelfile is here, which you can copy and paste). First, we specify which model we’re basing our tweaked version on:

FROM qwen2.5-coder:0.5b

Then we set the most important part of the Modelfile, the system prompt:

SYSTEM "You are an excellent assistant called CAI. You specialise in generating Bash scripts and commands for use on Unix systems. Respond only with the correct command for the given query. Do not provide any commentary, only respond with the command."

Given how small our model is already, we want to keep this as brief as possible. From trial and error, I’ve found this to mostly keep CAI on task with delivering one-liners.

Finally, we set the temperature - where on the creative/consistent spectrum we want the model to fall:

PARAMETER temperature 0.7

You can also set other parameters like the size of the context window, but this will do for now.

4) Run the new, local assistant🔗

With the Modelfile prepared, we can now ask Ollama to build the new model. We’ll call it cai:

ollama create cai -f /path/to/Modelfile

Once it’s created, we can now start running it. To ask the model a question, simply use the ollama run cai, followed by the query.

Integrating that with my little cai shell wrapper, we end up with:

Practically instant, and certainly quicker than an API call

Practically instant, and certainly quicker than an API call

And there we have it: a fully local, fully offline, fully free command-line assistant. One that won’t fry a knackered Thinkpad.